By Iqra Sharjeel

Based on: ChatGPT in medicine: prospects and challenges: a review article

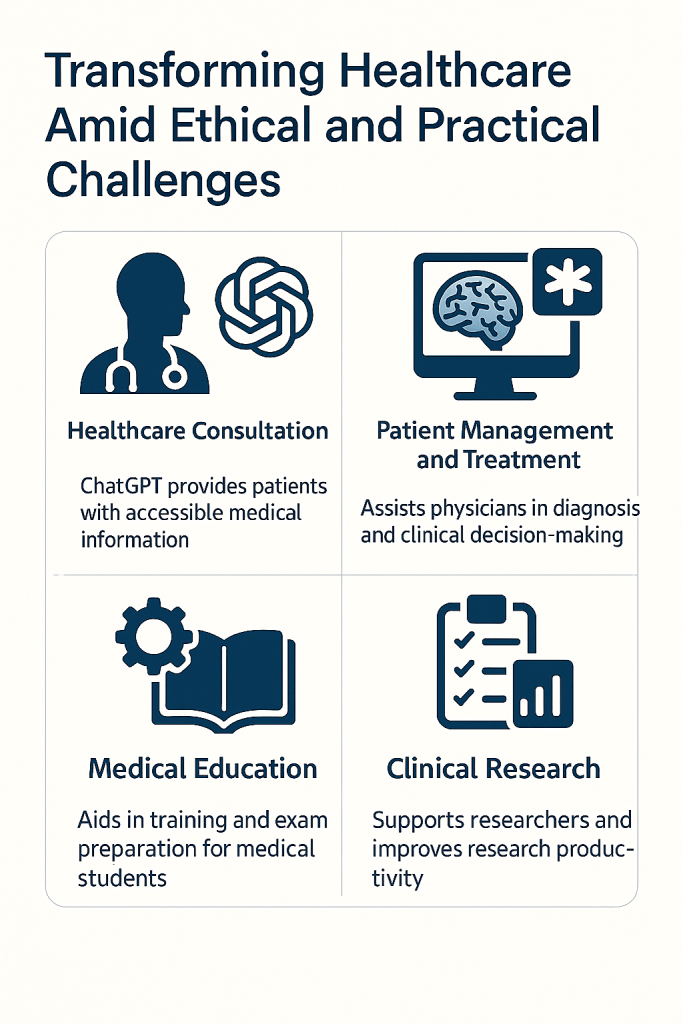

Since the release of ChatGPT by OpenAI in November 2022, the tool has rapidly gained prominence for its ability to generate human-like text based on natural language prompts. In the medical field, this generative AI model has drawn both excitement and criticism. Its vast capabilities suggest significant potential to transform healthcare delivery, education, and research. However, alongside these possibilities come serious concerns about ethics, accuracy, authorship, and patient safety. This review article by Tan, Xin, and Wu offers a balanced perspective on both the opportunities and the limitations of integrating ChatGPT into modern medicine.

Healthcare Consultation

One of the most promising applications of ChatGPT in medicine is its ability to enhance healthcare consultation. By rapidly processing and summarizing large volumes of data, ChatGPT can provide patients with accessible information about various medical conditions even before they see a physician. Studies show that its responses to questions about liver transplantation and breast cancer, for instance, were often rated “very good” or “excellent” by medical experts. Compared to traditional search engines like Google, ChatGPT tends to generate more detailed, specific, and empathetic responses. In particular, its ability to simulate empathetic human conversation makes it more relatable and reassuring to patients. It also proves useful in the realm of mental health by reducing stigma, offering private interactions, and assisting in emotion recognition and description. This function is especially valuable in settings where access to mental health professionals is limited or stigmatized. Additionally, ChatGPT can assist in travel medicine by providing timely disease outbreak alerts, personalized vaccine recommendations, and general health advice for travelers.

Patient Management and Treatment

Beyond patient education, ChatGPT has demonstrated strong potential in assisting physicians with diagnosis, treatment, and patient management. For example, in formal medical assessments such as the dermatology specialty certificate exam, ChatGPT achieved an 80% accuracy rate—well above the passing threshold. In managing complex conditions like fungal infections or cases requiring multidisciplinary treatment plans, its responses have shown a high level of concordance with expert recommendations. ChatGPT can process patient records, clinical guidelines, and current literature to generate summaries that can support medical decision-making and enable more personalized care plans. Additionally, ChatGPT’s image-processing capabilities are being explored to assist in the diagnosis of conditions such as retinopathies and skin lesions, and in surgical oncology to evaluate tumor locations and plan procedures. It can even generate predictive visualizations for post-surgical outcomes in plastic surgery, helping improve doctor–patient communication. In emergency settings, ChatGPT may also function as a triage chatbot to help prioritize care based on urgency. Finally, its ability to summarize and extract key information from clinical documents can dramatically reduce the administrative burden on healthcare providers by helping them write medical notes and surgical reports more efficiently.

Medical Education

ChatGPT is becoming an increasingly valuable educational tool, especially for medical students and early-career clinicians. Acting as a virtual tutor, it offers tailored learning experiences that adapt to individual learning styles and speeds. It has been successfully integrated into public health courses and bioinformatics training programs, where it helps students understand complex topics, write code, and debug programming tasks. ChatGPT has also been used to enrich veterinary and anatomical education, delivering detailed anatomical descriptions and facilitating case-based learning. Medical students preparing for licensing exams can use it to review complex topics, simulate clinical scenarios, and generate high-quality practice questions. For surgical trainees, it can help visualize and understand procedural steps, bridging the gap between theoretical knowledge and hands-on experience. The accessibility and consistency of AI-generated materials ensure that learners across different regions and institutions have access to high-quality resources, thus helping standardize medical education globally.

Clinical Research

In research, ChatGPT aids in generating innovative research questions and improving efficiency. It has been used to develop study ideas in fields like dysphagia, which were later evaluated as feasible and clinically relevant by experts. Its summarization capabilities help researchers stay updated with the latest findings and trends. Moreover, ChatGPT supports data-intensive tasks, such as analyzing gene expression data and predicting gene functions, as shown in the development of tools like GenePT. It also facilitates the identification of molecular interactions and potential drug targets. In academic writing, ChatGPT has proven effective in producing structured and logical text, outperforming human writers in certain evaluative tests. For non-native English speakers, it serves as an advanced writing assistant by correcting grammar and suggesting better vocabulary usage. It has also demonstrated competency in editing and proofreading, identifying errors and offering revisions comparable to professional editors.

Research Fraud and Ethical Concerns

Despite its capabilities, ChatGPT raises several ethical and practical concerns, particularly around academic misconduct. The ability to generate text rapidly and convincingly opens the door to plagiarism, ghostwriting, and the creation of false data or fabricated studies. AI-generated writing is difficult to detect, and many current detection tools perform poorly. In some cases, these tools even mistake human-written content for AI-generated text. Furthermore, increased reliance on ChatGPT may lead to a decline in original thinking and critical analysis among researchers. There is also ongoing debate about whether ChatGPT should be credited as a co-author on scientific publications. Although it has been listed as an author in some early papers, most journals and academic bodies reject this practice, citing its inability to take responsibility for content. According to ICMJE guidelines, authorship requires accountability, a requirement ChatGPT cannot meet. As such, many journals have instituted strict policies prohibiting AI-generated content without appropriate attribution or oversight.

AI Hallucination and Misinformation

One of the most serious technical issues with ChatGPT is its tendency to produce “AI hallucinations”: fabricated or misleading information presented as fact. This is particularly dangerous in the medical domain, where accuracy is critical. Studies have shown that a significant portion of references generated by ChatGPT are incorrect, partially fabricated, or do not exist at all. Even though newer versions like GPT-4 perform better than earlier iterations, they still generate a troubling percentage of false citations. These fabricated references often appear legitimate, with real-sounding author names and plausible journal titles, which can mislead researchers into citing unreliable or nonexistent studies. Such hallucinations, if unrecognized, can distort scientific conclusions and undermine trust in research findings. Therefore, any content or citation generated by ChatGPT must be independently verified before use in academic or clinical contexts.
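For readers who want to see what independent verification could look like in practice, here is a minimal sketch in Python. It is not taken from the review. It assumes you already hold a list of titles from a source you trust (for example, an export from a bibliographic database), and the function names `normalize_title` and `split_by_verification` are hypothetical. Matching on titles alone is a simplification: a real workflow would also check authors, journal, year, and DOI against the database itself.

```python
import re


def normalize_title(title: str) -> str:
    """Lowercase a title and collapse punctuation and spacing,
    so small formatting differences do not block a match."""
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()


def split_by_verification(generated_titles, trusted_titles):
    """Split AI-generated reference titles into those found in a
    trusted list and those that still need manual checking."""
    trusted = {normalize_title(t) for t in trusted_titles}
    verified, unverified = [], []
    for title in generated_titles:
        if normalize_title(title) in trusted:
            verified.append(title)
        else:
            unverified.append(title)
    return verified, unverified


# Hypothetical inputs: one real title (the review discussed here)
# and one placeholder standing in for a hallucinated reference.
trusted_titles = [
    "ChatGPT in medicine: prospects and challenges: a review article",
]
generated_titles = [
    "ChatGPT in Medicine: Prospects and Challenges: A Review Article",
    "Placeholder title standing in for a fabricated reference",
]

verified, unverified = split_by_verification(generated_titles, trusted_titles)
print("Verified:", verified)
print("Needs manual checking:", unverified)
```

Anything that lands in the second list is not necessarily fake; it simply has not been confirmed and should be looked up by hand before it is cited.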

Limitations in Clinical Practice and Patient Safety

Despite ChatGPT’s success in non-clinical domains, its implementation in real-world medical practice is fraught with risk. In clinical care, where decisions affect patient lives, even a minor error can have severe consequences. ChatGPT’s responses are not always reproducible and may vary with slight changes in input phrasing. Moreover, because its responses often lack regional specificity or detailed clinical judgment, its utility in guiding actual patient care remains limited. In studies assessing ChatGPT’s role in clinical scenarios, the AI could offer general therapeutic suggestions but failed to provide nuanced or context-specific insights needed for real-world decisions. To truly benefit from AI in clinical environments, systems like ChatGPT would need access to sensitive patient data. However, this raises major concerns about data privacy, security, and regulatory compliance. At present, there is no legal framework to clearly define accountability or ethical responsibility in the use of generative AI in medicine, making the widespread adoption of such tools in clinical settings premature.
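The reproducibility concern can be made concrete with a small sketch. The Python below is an illustration, not a method from the review: it assumes you have already collected several responses to the same question (or to slightly reworded versions of it) and scores how much their wording overlaps. The function names are hypothetical, and word overlap (Jaccard similarity) is only a crude proxy, since two answers can share most of their words and still give different clinical advice.

```python
from itertools import combinations


def jaccard(a: str, b: str) -> float:
    """Share of distinct words that two responses have in common."""
    words_a, words_b = set(a.lower().split()), set(b.lower().split())
    if not words_a and not words_b:
        return 1.0  # two empty responses are trivially identical
    return len(words_a & words_b) / len(words_a | words_b)


def mean_pairwise_agreement(responses) -> float:
    """Average Jaccard similarity over every pair of responses."""
    pairs = list(combinations(responses, 2))
    if not pairs:
        return 1.0  # zero or one response: nothing to disagree with
    return sum(jaccard(a, b) for a, b in pairs) / len(pairs)


# Hypothetical responses, written by hand for illustration only.
responses = [
    "rest and fluids are usually recommended",
    "rest and fluids are usually advised",
    "see a doctor if symptoms persist",
]
print(round(mean_pairwise_agreement(responses), 2))
```

A low score across repeated runs is a signal that the answers need closer human review, not a verdict on which answer is correct.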

Conclusion

The article concludes by acknowledging the revolutionary potential of ChatGPT to reshape healthcare, particularly in medical education and research. The technology has already demonstrated substantial benefits in improving efficiency, reducing workloads, and enhancing access to information. However, the review emphasizes that ChatGPT should not be seen as a substitute for trained healthcare professionals, especially in clinical practice where precision and responsibility are critical. The medical community must proceed with caution, embracing the tools where they are safe—such as education—while demanding stronger regulations, clearer guidelines, and continued human oversight in more sensitive areas. Only with proper ethical safeguards can the promise of AI in medicine be realized without compromising patient safety or scientific integrity.
